MIT researchers want you to decide how self-driving cars should make moral decisions. It’s a modern – and potentially real – twist on a classic hypothetical ethics problem.

The trolley problem, in its simplest form, asks you to imagine you are driving a train and find you are about to hit five people who are on the track ahead. There’s no time to stop the train or for the people to get out of the way, but you could divert it onto another track where you spot one person in the way.

The question is whether you divert. The choice is between the seemingly more rational decision to minimize casualties by diverting and the more philosophical view that actively choosing to divert makes you responsible for killing the one person, whereas staying on course means the five deaths are nobody’s fault. Variants include making the one person a relative or friend of yours.

In everyday driving, people occasionally have to make similar decisions when a crash is imminent and, for the most part, it’s accepted that how to respond is down to the individual driver. However, with self-driving technology now a reality, philosophers, lawmakers and manufacturers alike are weighing up whether and how cars should be programmed to make such moral decisions.

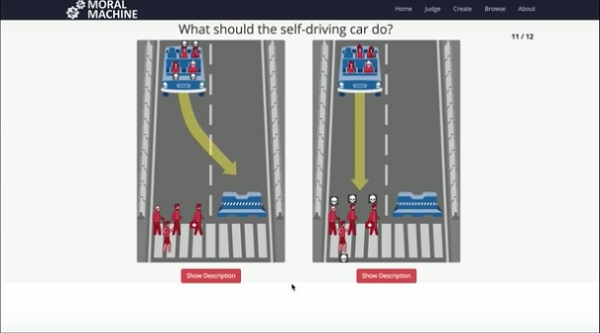

The MIT project, Moral Machine, asks participants to explore such dilemmas and say which decision they’d like to see the car make. One example asks whether the car should continue down a road and hit five pedestrians, or swerve and hit a bollard, killing two passengers in the car.

After making the decision, you can check how other people responded. You can even create your own scenarios.